We’ve grown accustomed to calling things “computers” that, until recently, were just everyday objects. Cars are the best-known example. With more code than a fighter jet and more silicon than steel in some cases, they’ve become computers on wheels – some even self-driving. The same applies to smart fridges, watches, thermostats, and yes, even washing machines.

Yet paradoxically, we don’t extend the same digital dignity to the very environments that host computation. Even though data centers are purpose-built to manage IT infrastructure, they are still often reduced to real estate – viewed as “buildings” by facilities teams and as “compute” by IT teams, with little middle ground. That’s no longer sustainable.

The data center as the next unit of compute

This is where AI, and particularly accelerated computing, becomes the inflection point. The increasing density of AI workloads – driven by models with trillions of parameters and GPU clusters pulling 100kW+ per rack – is forcing a rethink of traditional abstractions.

Jensen Huang, Nvidia CEO, has argued that the data center itself is now the unit of compute. That’s a compelling evolution: Chip > Server > Rack > Row > Room > Data Center, with each layer a more integrated and optimized machine, not a loose assembly of parts.

451 Research and others have gone further, suggesting we think of the data center as a machine, not a building, and as the next unit of compute. Machines have blueprints, tolerance specs, and performance metrics. They are engineered. Measured. Tuned. Repeatable.

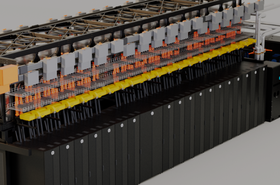

The dramatic expansion in AI training by large cloud and LLM providers has begun to move the needle. Rising rack power densities have finally made the physics-based arguments for liquid cooling – where IT and thermal management are tightly integrated – more persuasive. Innovation is also happening in power delivery and distribution, coupling them more closely with IT.

Vertiv has been spearheading this IT/OT convergence on several fronts, including through its close technology partnerships with leading chipmakers such as Nvidia. I recently presented Vertiv’s view on the concept of the data center as the next unit of compute at Nvidia’s GTC event in San Jose, California, in a joint session with analyst firm IDC. The central attributes of that idea include:

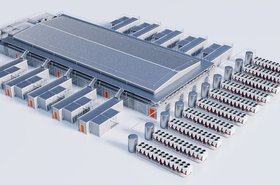

- The overall data center resembles a printed circuit board. That’s power, cooling, and IT services all fused together, delivering more than 20 percent more energy efficiency and 30 percent more space efficiency, deployed in half the time and with a 25 percent saving in total cost of ownership.

- Another key attribute is that this approach handles dynamic workloads. It’s like a pit crew: the car zooms in for a pit stop and everything is in sync to refuel and change tires. Too much and it’s wasted, too little and the race is lost. It’s the same for GPUs, where power and cooling needs have to be predicted ahead of time.

Vertiv recently released a complete 7MW reference architecture for the Nvidia GB200 NVL72 platform, co-developed with Nvidia. The reference architecture accelerates the deployment of the Nvidia GB200 NVL72 liquid-cooled rack-scale platform and supports up to 132kW per rack. Vertiv continues its close collaboration with Nvidia on next-generation AI infrastructure, including forthcoming GPU platforms introduced at Nvidia’s flagship GTC 2025 event.

As well as the argument that data centers should be considered as units of compute, there is a related push to classify data centers optimized for AI as ‘AI Factories’ and even the largest hyperscale sites as ‘AI Gigafactories.’ Interestingly, it also continues the narrative of the data center as a building – albeit one dedicated to ‘manufacturing’ AI at scale.

Nvidia is also working with Vertiv and others to deliver on this AI Factory future through advanced digital twin technology, as well as the potential use of AI agents to go beyond even the Data Center Infrastructure Management (DCIM) hype promised nearly a decade ago.

The convergence is inevitable

The road to self-driving data centers is beginning to resemble the road to self-driving cars – longer than expected, but very much underway. Compute is becoming infrastructure. Infrastructure is becoming intelligent. And the data center is evolving from building to machine.

Whether we call them buildings, factories, or computers will eventually become semantics. What matters is recognizing what they’ve become: intelligent, interconnected systems engineered to deliver AI at scale.
